One of the quintessential tasks of open data is sentiment analysis. A very common example of this is using tweets from Twitter’s streaming API. In this article I’m going to show you how to capture Twitter data live, make sense of it and do some basic plots based on the NLTK sentiment analysis library.

What is sentiment analysis?

The result of sentiment analysis is as it sounds – it returns an estimate of whether a piece of text is generally happy, neutral, or sad. The magic behind this is a Python library known as NLTK – the Natural Language Toolkit. The smart people who wrote this package took what is known about Natural Language Processing in the literature and packaged it for dummies like me to use. In short, it has a database of commonly used positive and negative words that it checks against and does a basic vote count – positives are 1 and negatives are -1, with the sign of the total deciding whether the text comes out positive, negative, or (at zero) neutral. You can get really smart about how exactly you build the database, but in this article I’m just going to stick with the stock library that it comes with.
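To make the vote-count idea concrete, here is a minimal sketch in plain Python. The two word lists are invented for this example and are not the data that NLTK or TextBlob actually ship with; the function names are also just placeholders.

```python
# Toy word lists, made up for this sketch; a real lexicon is far larger.
POSITIVE = {"love", "great", "fun", "magical", "happy"}
NEGATIVE = {"hate", "awful", "boring", "broken", "sad"}


def vote_count(text):
    """Add 1 for every positive word and subtract 1 for every negative word."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


def label(text):
    """Turn the vote total into positive / negative / neutral."""
    score = vote_count(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(label("I love this magical park!"))
print(label("The queue was awful and boring."))
print(label("The park opens at nine."))
```

Anything the word lists have never seen counts for nothing, so a sentence with no listed words lands on neutral no matter how it reads to a human.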

Asking politely for your data

Twitter is really open with their data, and it’s worth being nice in return. That means telling them who you are before you start crawling through their servers. Thankfully, they’ve made this really easy as well.

Surf over to the Twitter Apps site, sign in (or create an account if you need to, you luddite) and click on the ‘Create new app’ button. Don’t freak out – I know you’re not an app developer! We just need to do this to create an API key. Now click on the app you just created, then on the ‘Keys and Access Tokens’ tab. You’ll see four strings of letters – your consumer key, consumer secret, access token and access token secret. Copy and paste these and store them somewhere only you can get to – offline on your local drive. If you make these public (by publishing them on GitHub, for example) you’ll have to disable them immediately and get new ones. Don’t underestimate how badly a hacker with your key can screw you, Twitter and everyone on it – with you taking all the blame.

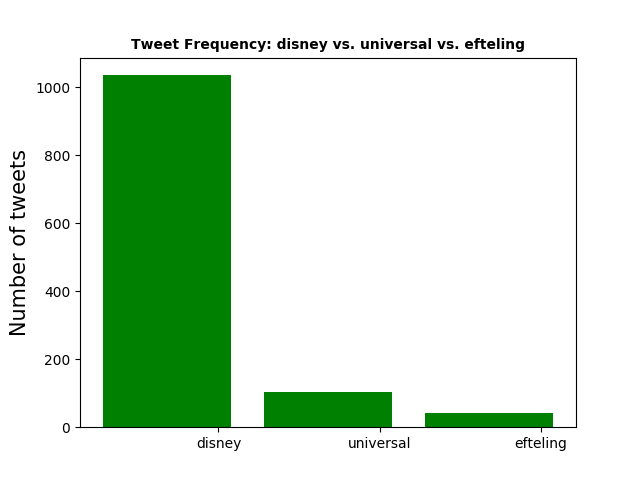
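One way to keep the four strings out of any file you might publish is to read them from environment variables. This is only a sketch: the variable names and the `load_credentials` helper are my own invention, not something Twitter or tweepy requires.

```python
import os

# Hypothetical variable names; use whatever suits your setup.
REQUIRED = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_TOKEN",
    "TWITTER_ACCESS_TOKEN_SECRET",
)


def load_credentials(env=None):
    """Return the four Twitter strings, failing loudly if any is missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


# Demonstration with a fake environment so the sketch runs anywhere.
demo_env = {name: "XXXX" for name in REQUIRED}
print(sorted(load_credentials(demo_env)))
```

In a real script you would call `load_credentials()` with no argument and pass the values on to whatever handles the authentication.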

Now that the serious, scary stuff is over we can get to streaming some data! The first thing we’ll need to do is create a file that captures the tweets we’re interested in – in our case anything mentioning Disney, Universal or Efteling. I expect that there’ll be a lot more for Disney and Universal given they have multiple parks globally, but I’m kind of interested to see how the Efteling tweets do when I just smash them into the NLTK workflow.

Here’s the Python code you’ll need to start streaming your tweets:

# I adapted all this stuff from http://adilmoujahid.com/posts/2014/07/twitter-analytics/ - check out Adil's blog if you get a chance!
# Written for tweepy 3.x (StreamListener was merged into Stream in tweepy 4.0).

# Import the necessary methods from the tweepy library
import re
from tweepy.streaming import StreamListener
from tweepy import OAuthHandler
from tweepy import Stream

# Variables that contain the user credentials to access the Twitter API
access_token = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"
access_token_secret = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"
consumer_key = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"
consumer_secret = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"


# This is a basic listener that just prints received tweets to stdout.
class StdOutListener(StreamListener):

    def on_data(self, data):
        print(data)
        return True

    def on_error(self, status):
        print(status)


if __name__ == '__main__':

    # This handles Twitter authentication and the connection to the Twitter Streaming API
    l = StdOutListener()
    auth = OAuthHandler(consumer_key, consumer_secret)
    auth.set_access_token(access_token, access_token_secret)
    stream = Stream(auth, l)

    # This line filters Twitter Streams to capture data by the keywords commonly used in amusement park tweets.
    stream.filter(track=[
        "#Disneyland", "#universalstudios", "#universalstudiosFlorida",
        "#UniversalStudiosFlorida", "#universalstudioslorida", "#magickingdom",
        "#Epcot", "#EPCOT", "#epcot", "#animalkingdom", "#AnimalKingdom",
        "#disneyworld", "#DisneyWorld", "Disney's Hollywood Studios",
        "#Efteling", "#efteling", "De Efteling", "Universal Studios Japan",
        "#WDW", "#dubaiparksandresorts", "#harrypotterworld", "#disneyland",
        "#UniversalStudios", "#waltdisneyworld", "#disneylandparis",
        "#tokyodisneyland", "#themepark"])

If you’d prefer, you can download this from my GitHub repo instead. To be able to use it you’ll need to install the tweepy package using:

pip install tweepy

The only other thing you have to do is enter the strings you got from Twitter in the previous step and you’ll have it running. To save the output to a file, you can use the terminal (cmd in Windows) by running:

python theme_park_tweets.py > twitter_themeparks.txt

For a decent body of text to analyse I ran this for about 24 hours. You’ll see how much I got back for that time and can make your own judgment. When you’re done hit Ctrl-C to kill the script, then open up the file and see what you’ve got.

Yaaaay! Garble!

So you’re probably pretty excited by now – we’ve streamed data live and captured it! You’ve probably been dreaming for the last 24 hours about all the cool stuff you’re going to do with it. Then you get this:

{"created_at":"Sun May 07 17:01:41 +0000 2017","id":861264785677189
120,"id_str":"861264785677189120","text":"RT @CCC_DisneyUni: I have
n't been to #PixieHollow in awhile! Hello, #TinkerBell! #Disney #Di
sneylandResort #DLR #Disneyland\u2026 ","source":"\u003ca href=\"ht
tps:\/\/disneyduder.com\" rel=\"nofollow\"\u003eDisneyDuder\u003c\/
a\u003e","truncated":false,"in_reply_to_status_id":null,"in_reply_t
o_status_id_str":null,"in_reply_to_user_id":null,"in_reply_to_user
_id_str":null,"in_reply_to_screen_name":null,"user":{"id":467539697
0,"id_str":"4675396970","name":"Disney Dude","screen_name":"DisneyDu
der","location":"Disneyland, CA","url":null,"description":null,"pro
tected":false,"verified":false,"followers_count":1237,"friends_coun
t":18,"listed_count":479,"favourites_count":37104,"statuses_count":
37439,"created_at":"Wed Dec 30 00:41:42 +0000 2015","utc_offset":nu
ll,"time_zone":null,"geo_enabled":false,"lang":"en","contributors_e
...

So, not quite garble maybe, but still ‘not a chance’ territory. What we need is something that can make sense of all of this, cut out the junk, and arrange it how we need it for sentiment analysis.
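Each line of that file is one JSON object, so the standard `json` module can already pick out the handful of fields we care about. Here is a small sketch; the sample line is a shortened, partly made-up version of the tweet above, and `summarise` is just a name I chose for the helper.

```python
import json

# A shortened line in the same shape as the stream output (values partly invented).
raw_line = (
    '{"created_at": "Sun May 07 17:01:41 +0000 2017", '
    '"text": "Hello, #TinkerBell! #Disneyland", '
    '"user": {"name": "Disney Dude", "followers_count": 1237}, '
    '"retweet_count": 0}'
)


def summarise(line):
    """Keep only the fields needed for the analysis; missing ones become None."""
    tweet = json.loads(line)
    user = tweet.get("user") or {}
    return {
        "user_name": user.get("name"),
        "followers": user.get("followers_count"),
        "text": tweet.get("text"),
        "retweets": tweet.get("retweet_count"),
    }


print(summarise(raw_line))
```

Using `.get()` rather than square brackets means a line that isn't a normal tweet gives a row of `None` values instead of crashing the script.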

To do this we’re going to employ a second Python script, which is also in my GitHub repo. It uses a bunch of other Python packages: json and re come with Python, but you might need to install pandas, matplotlib, and TextBlob (which builds on the NLTK libraries I mentioned before) with pip. If you don’t want to go to GitHub (luddite), the code you’ll need is here:

import json
import pandas as pd
import matplotlib.pyplot as plt
from textblob import TextBlob
import re

# These functions come from https://github.com/adilmoujahid/Twitter_Analytics/blob/master/analyze_tweets.py and http://www.geeksforgeeks.org/twitter-sentiment-analysis-using-python//

def extract_link(text):
    """
    This function pulls the first link out of the tweet (or returns '' if there isn't one) - we'll put them back more cleanly later
    """
    regex = r'https?://[^\s<>"]+|www\.[^\s<>"]+'
    match = re.search(regex, text)
    if match:
        return match.group()
    return ''

def word_in_text(word, text):
    """
    Use regex to figure out which park or ride they're talking about.
    I might use this in future in combination with my wikipedia scraping script.
    """
    word = word.lower()
    text = text.lower()
    match = re.search(word, text, re.I)
    if match:
        return True
    return False

def clean_tweet(tweet):
    '''
    Utility function to clean tweet text by removing @mentions, links and special characters
    using simple regex statements.
    '''
    # Raw string so Python doesn't complain about the regex escapes
    return ' '.join(re.sub(r"(@[A-Za-z0-9]+)|([^0-9A-Za-z \t])|(\w+:\/\/\S+)", " ", tweet).split())

def get_tweet_sentiment(tweet):
    '''
    Utility function to classify sentiment of passed tweet
    using textblob's sentiment method
    '''
    # create TextBlob object of passed tweet text
    analysis = TextBlob(clean_tweet(tweet))
    # set sentiment
    if analysis.sentiment.polarity > 0:
        return 'positive'
    elif analysis.sentiment.polarity == 0:
        return 'neutral'
    else:
        return 'negative'

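# Load up the file generated from the Twitter stream capture.
# I've assumed it's loaded in a folder called data which I won't upload because git.
tweets_data_path = '../data/twitter_themeparks.txt'

tweets_data = []
with open(tweets_data_path, "r") as tweets_file:
    for line in tweets_file:
        try:
            tweet = json.loads(line)
        except ValueError:
            # Skip blank lines and anything that isn't valid JSON
            continue
        # Keep real tweets only: the file can also hold non-tweet messages
        # and the status codes printed by on_error
        if isinstance(tweet, dict) and 'text' in tweet:
            tweets_data.append(tweet)

# Check you've created a list that actually has a length. Huzzah!
print(len(tweets_data))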

# Turn the tweets_data list into a Pandas DataFrame with a wide section of True/False for which park they talk about
# (Adapted from https://github.com/adilmoujahid/Twitter_Analytics/blob/master/analyze_tweets.py)
# List comprehensions rather than map() so this also works on Python 3
tweets = pd.DataFrame()
tweets['user_name'] = [tweet['user']['name'] if tweet['user'] is not None else None for tweet in tweets_data]
tweets['followers'] = [tweet['user']['followers_count'] if tweet['user'] is not None else None for tweet in tweets_data]
tweets['text'] = [tweet['text'] for tweet in tweets_data]
tweets['retweets'] = [tweet['retweet_count'] for tweet in tweets_data]
tweets['disney'] = tweets['text'].apply(lambda tweet: word_in_text(r'(disney|magickingdom|epcot|WDW|animalkingdom|hollywood)', tweet))
tweets['universal'] = tweets['text'].apply(lambda tweet: word_in_text(r'(universal|potter)', tweet))
tweets['efteling'] = tweets['text'].apply(lambda tweet: word_in_text('efteling', tweet))
tweets['link'] = tweets['text'].apply(lambda tweet: extract_link(tweet))
tweets['sentiment'] = tweets['text'].apply(lambda tweet: get_tweet_sentiment(tweet))

# I want to add in a column called 'park' as well that will list which park is being talked about, and add an entry for 'unknown'
# I'm 100% sure there's a better way to do this...
park = []
for index, tweet in tweets.iterrows():
    if tweet['disney']:
        park.append('disney')
    elif tweet['universal']:
        park.append('universal')
    elif tweet['efteling']:
        park.append('efteling')
    else:
        park.append('unknown')

tweets['park'] = park

# Create a dataset that will be used in a graph of tweet count by park
parks = ['disney', 'universal', 'efteling']
# Summing a True/False column counts the Trues, and gives 0 instead of an error if a park has no tweets
tweets_by_park = [tweets['disney'].sum(), tweets['universal'].sum(), tweets['efteling'].sum()]
x_pos = list(range(len(parks)))
width = 0.8
fig, ax = plt.subplots()
plt.bar(x_pos, tweets_by_park, width, align='center', alpha=1, color='g')

# Set axis labels and ticks
ax.set_ylabel('Number of tweets', fontsize=15)
ax.set_title('Tweet Frequency: disney vs. universal vs. efteling', fontsize=10, fontweight='bold')
# The bars are centred on x_pos, so the ticks go there too
ax.set_xticks(x_pos)
ax.set_xticklabels(parks)

# You need to write this for the graph to actually appear.
plt.show()

# Create a graph of the proportion of positive, negative and neutral tweets for each park
# I have to do two groupby's here because I want proportion within each park, not global proportions.
sent_by_park = tweets.groupby(['park', 'sentiment']).size().groupby(level=0).transform(lambda x: x / x.sum()).unstack()
sent_by_park.plot(kind='bar')
plt.title('Tweet Sentiment proportions by park')
plt.show()
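If the double groupby in that last step looks cryptic, this plain-Python sketch does the same arithmetic by hand: count the sentiments inside each park, then divide by that park's own total rather than the grand total. The sample rows and the function name are made up for illustration.

```python
from collections import Counter, defaultdict

# Made-up (park, sentiment) pairs standing in for rows of the tweets DataFrame.
rows = [
    ("disney", "positive"), ("disney", "positive"),
    ("disney", "neutral"), ("disney", "negative"),
    ("universal", "positive"), ("universal", "neutral"),
]


def proportions_within_park(pairs):
    """Return {park: {sentiment: share of that park's tweets}}."""
    counts = defaultdict(Counter)
    for park, sentiment in pairs:
        counts[park][sentiment] += 1  # first grouping: park and sentiment
    result = {}
    for park, sentiment_counts in counts.items():
        total = sum(sentiment_counts.values())  # second grouping: park only
        result[park] = {s: n / total for s, n in sentiment_counts.items()}
    return result


for park, shares in proportions_within_park(rows).items():
    print(park, shares)
```

Dividing by the grand total instead would make a park with few tweets look less positive simply because it is small, which is not the comparison I'm after.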

The Results

If you run this in your terminal, it spits out how many tweets you recorded overall, then gives these two graphs:

So you can see from the first graph that out of the tweets I could classify with my dodgy regex skills, Disney was by far the most talked about, with Universal following a long way behind. This might reflect the genuine popularity of the parks and the enthusiasm of their fans, but it probably has more to do with the variety of hashtags and keywords people use for Universal compared to Disney. In retrospect I should have added a lot more of the Universal brands as keywords – things like Marvel or NBC. Efteling barely registered at all, which isn’t really surprising – most of the tweets would be in Dutch and I really don’t know what keywords people use to mark them. I’m not even sure how many Dutch people use Twitter!

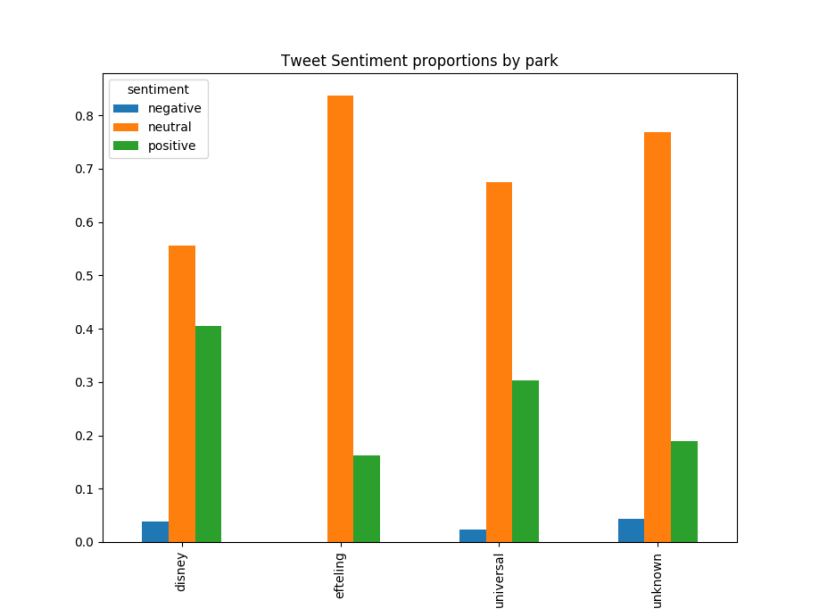

The second graph shows something rather more interesting – Disney parks seem to come out on top in terms of the proportion of positive tweets as well. This is somewhat surprising – after all, Universal and Efteling should elicit the same levels of positive sentiment – but I really don’t trust these results at this point. For one, there’s a good number of tweets I wasn’t able to classify despite filtering the terms in the initial script. This is probably down to my regex skills, but I’m happy that I’ve proved the point and done something useful in this article. Second, there are far too many neutral tweets in the set, and while I know most tweets are purely informative (“Hey, an event happened!”) this is still too high for me to not be suspicious. When I dig into the tweets themselves I can find ones that are distinctly negative (“Two hours of park time wasted…”) that get classed as neutral. It seems that the stock NLTK library might not be all that was promised.

Stuff I’ll do next time

There are a few things I could do here to improve my analysis. First, I need to work out what went wrong with my filtering and sorting terms such that I ended up with so many unclassified tweets. There should be none, and I need to work out a way for both files to read from the same list.
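As a rough idea of what a shared list could look like, here is a sketch of a single keyword table that feeds both the stream filter and the classification. The structure, the function names and the shortened keyword lists are my own assumptions, and the matching rule (every word of a term must appear somewhere in the tweet) is a simplification I picked for the example.

```python
# Hypothetical shared module that both scripts could import.
PARK_KEYWORDS = {
    "disney": ["#Disneyland", "#magickingdom", "#Epcot", "#WDW",
               "Disney's Hollywood Studios"],
    "universal": ["#UniversalStudios", "#harrypotterworld",
                  "Universal Studios Japan"],
    "efteling": ["#Efteling", "De Efteling"],
}


def track_terms():
    """Flat list of every keyword, ready to hand to the stream filter."""
    return [term for terms in PARK_KEYWORDS.values() for term in terms]


def classify(text):
    """Return the first park with a matching keyword, else 'unknown'."""
    lowered = text.lower()
    for park, terms in PARK_KEYWORDS.items():
        for term in terms:
            words = term.lower().lstrip("#").split()
            if all(word in lowered for word in words):
                return park
    return "unknown"


print(len(track_terms()))
print(classify("Loved #Epcot today"))
print(classify("Just a normal Sunday"))
```

Because every tracked term belongs to a park, nothing the stream captures by keyword should fall through to unknown. In my original scripts, terms like "#themepark" and "#dubaiparksandresorts" were tracked but matched none of the three park regexes, which probably explains at least some of the unclassified tweets.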

Second, I should start digging into the language libraries in Python and start training my own model on collected data. This is basically linguistic machine learning, but it requires that I go through and rate the tweets myself – not really something I’m going to do. I need to figure out a way to label the data reliably, then build my own libraries to learn from.
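To give a feel for what learning from labelled tweets involves, here is a toy Naive Bayes classifier in plain Python. Naive Bayes is just one common choice that I'm using for illustration, not something the stock library does for you, and the four labelled examples are invented; a real training set would need thousands.

```python
import math
from collections import Counter, defaultdict

# Tiny hand-labelled sample, invented for illustration only.
LABELLED = [
    ("two hours of park time wasted", "negative"),
    ("ride broke down again so disappointed", "negative"),
    ("best day ever at the park", "positive"),
    ("loved the fireworks tonight", "positive"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in text.split():
            word_counts[label][word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def predict(model, text):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, float("-inf")
    for label in label_counts:
        score = math.log(label_counts[label] / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.split():
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(LABELLED)
print(predict(model, "wasted two hours"))
print(predict(model, "loved the fireworks"))
```

The catch is exactly the one mentioned above: somebody has to supply the labels, and the classifier is only as good as they are.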

Finally, all this work could be presented a lot better in an interactive dashboard that runs off live data. I’ve had some experience with RShiny, but I don’t really want to switch software at this point as it would mean a massive slowdown in processing. Ideally I would work out a JavaScript solution that I can post on here.

Let me know how you go and what your results are. I’d love to see what things you apply this code to. A lot of credit goes to Adil Moujahid and Nikhil Kumar, upon whose code a lot of this is based. Check out their profiles on GitHub when you get a chance.

Thanks for reading, see you next time 🙂